
A YouTube tool that uses creators’ biometrics to help them remove AI-generated videos that abuse their likeness could also allow Google to train its AI models on that sensitive data, experts told CNBC.

In response to concerns from intellectual property experts, YouTube told CNBC that Google has never used creators’ biometric data to train its AI models and is reviewing the language used in the tool’s registration form to avoid confusion. But YouTube told CNBC it has no intention of changing its fundamental policies.

The discrepancy highlights a broader divide within Alphabet, where Google is aggressively expanding its AI efforts while YouTube works to maintain trust with the creators and rights holders who rely on the platform for business.

YouTube is expanding the “Similarity Detection” tool it introduced in October. The tool alerts creators when their face is used without permission in a deepfake, the term for fake videos created using AI. As AI-generated content proliferates across social media, the company is extending the feature to the millions of creators in the YouTube Partner Program.

The tool scans videos uploaded across YouTube to identify places where a creator’s face may have been altered or generated by artificial intelligence. Creators can then decide whether to request the video’s removal. To use the tool, however, creators must upload a government ID and a biometric video of their face. Biometrics are measurements of physical characteristics used to verify a person’s identity.

Experts say that by tying the tool to Google’s privacy policy, YouTube leaves creators’ biometrics open to future abuse. The policy states that public content containing biometric information can be used “to help train Google’s AI models and build products and features.”

“Similarity detection is a completely optional feature, but requires visual reference to function,” YouTube spokesperson Jacques Maron said in a statement to CNBC. “Our approach to that data has not changed. As our Help Center has stated since its launch, the data provided to the similarity detection tool is used only for identity verification purposes and to enhance this specific safety feature.”

YouTube told CNBC that it is “looking at ways to make the language in our products more clear.” The company did not say what changes would be made to the wording or when they would take effect.

Experts remain cautious and said they raised concerns about the policy with YouTube several months ago.

“As Google competes in AI and training data becomes strategic gold, creators need to think carefully about whether they want their faces to be controlled by a platform rather than owned by them,” said Dan Neely, CEO of Vermillio, which helps individuals protect their likeness from being misused and also facilitates secure licensing of authorized content. “Your likeness will become one of your most valuable assets in the AI era. Once you relinquish control, you may never get it back.”

Vermillio and Loti are third-party companies that work with creators, celebrities, and media companies to monitor and enforce publicity rights on the internet. Advances in AI video generation have made their services more useful to intellectual property owners.

Loti CEO Luke Arrigoni said the risks of YouTube’s current biometrics policy are “huge.”

“With this release, we can attach a name to a biometric of a real face, potentially allowing us to create something more synthetic that resembles that person,” Arrigoni said.

Both Neely and Arrigoni said they are not recommending their clients sign up for similarity detection on YouTube at this time.

Amjad Hanif, YouTube’s director of creator products, said the company built its similarity detection tools to work at “YouTube scale,” where hundreds of hours of new footage are posted every minute. Hanif said the tool will be available to the more than 3 million creators in the YouTube Partner Program by the end of January.

“If creators do well, we do well,” Hanif told CNBC. “We are here as stewards and supporters of the creator ecosystem, which is why we invest in tools that support creators on their ecosystem journey.”

This development comes as the quality and accessibility of AI-generated video tools rapidly improve, raising new concerns for creators whose likeness and voice are central to their businesses.

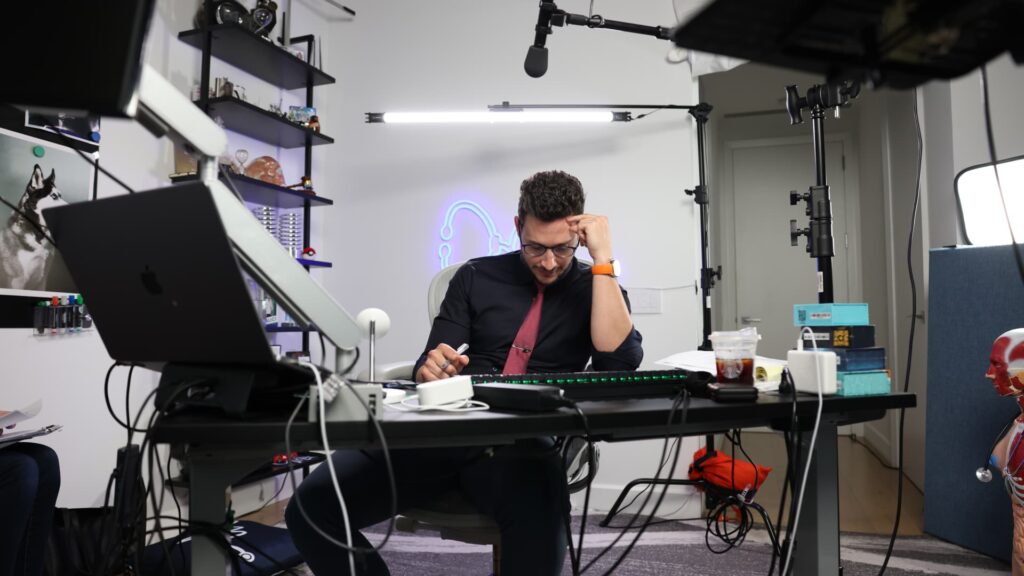

YouTuber Dr. Mike (real name Mikhail Varshavski) has spent nearly a decade creating videos that react to TV medical dramas, answer questions about health trends, and debunk myths that have permeated the internet.

Dr. Mike

YouTube creator Mikhail Varshavski, a doctor known as Dr. Mike on the video platform, said he uses the service’s similarity detection tool to review dozens of AI-generated videos a week.

Varshavski has been on YouTube for nearly a decade and has amassed more than 14 million subscribers on the platform. He creates videos reacting to TV medical dramas, answering questions about health trends, and debunking myths. He relies on his credibility as a board-certified physician to inform his audience.

Rapid advances in AI could make it easier for malicious actors to copy his face and voice in deepfake videos, potentially giving viewers misleading medical advice, Varshavski said.

He first encountered a deepfake of himself on TikTok, where an AI-generated doppelganger was promoting a “miracle” supplement that Varshavski had never heard of.

“Obviously it surprised me, because I’ve invested over 10 years into earning the trust of my audience, telling them the truth, and helping them make good health care decisions,” he said. “When I saw someone use my likeness to trick someone into buying something they didn’t need or something that could hurt them, that situation scared the crap out of me.”

AI video generation tools like Google’s Veo 3 and OpenAI’s Sora have made creating deepfakes of celebrities and creators like Varshavski much easier. That’s because their likenesses are often included in the datasets that technology companies use to train AI models.

Veo 3 is trained on a subset of the more than 20 billion videos uploaded to YouTube, CNBC reported in July. That could include hundreds of hours of Varshavski’s videos.

Varshavski said deepfakes are “more widespread and proliferating.” “I’ve seen a whole channel created to weaponize these kinds of AI deepfakes, whether it’s to trick people into buying a product or strictly to bully someone.”

Currently, there is no way for creators to monetize unauthorized use of their likeness, unlike the revenue sharing options available through YouTube’s Content ID system for copyrighted material, which is typically used by companies with large copyright catalogs. YouTube’s Hanif said the company is considering how a similar model might work for the use of AI-generated likenesses in the future.

Earlier this year, YouTube gave creators the option to allow third-party AI companies to train on their videos. Hanif said millions of creators participate in the program without the promise of compensation.

Hanif declined to provide accuracy metrics, but said the team is still working to improve the product’s accuracy and that early tests have been successful.

As for takedown activity across the platform, Hanif said that activity remains low, largely because many creators choose not to remove flagged videos.

“They’ll be happy to know it’s there, but they don’t really feel it’s worth removing,” Hanif said. “The most common behavior by far is to say, ‘I saw it, but it’s okay.'”

Representatives and advocacy groups told CNBC that the low number of takedowns is likely due to confusion and lack of awareness rather than comfort with AI content.
