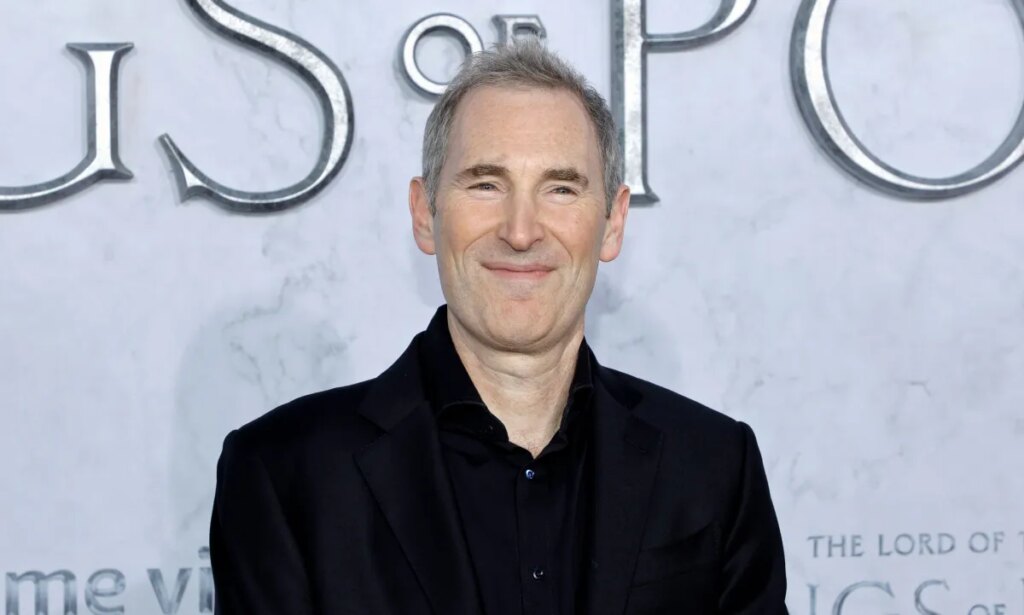

Can any company, large or small, really topple Nvidia’s dominance in AI chips? Probably not. But a company that can peel away even a piece of it for itself could make hundreds of billions of dollars in revenue, Amazon CEO Andy Jassy said this week.

As expected, the company announced Trainium3, its next-generation AI chip and a competitor to Nvidia’s, at the AWS re:Invent conference. It is four times faster and uses less power than the current Trainium2. Jassy revealed a few details about Trainium in a post on X today that show why the company is so bullish on the chip.

He said the Trainium2 business has “significant traction, is a multi-billion dollar revenue run-rate business, with over 1 million chips in production, and over 100,000 companies currently using it as a large part of their Bedrock usage.”

Bedrock is Amazon’s AI app development tool that allows businesses to choose from a large number of AI models.

Jassy said Amazon’s AI chips are popular among the company’s vast base of cloud customers because of their “compelling price-performance ratio compared to other GPU options.” In other words, he believes they perform better and cost less than “other GPUs” on the market.

That, of course, is Amazon’s classic MO of offering homegrown technology at a low price.

Additionally, in an interview with CRN, AWS CEO Matt Garman offered more detail on the one customer responsible for the majority of those billions of dollars in Trainium revenue. No surprise here: it’s Anthropic.

“We’ve seen significant traction with Trainium2, especially from our partners at Anthropic who announced Project Rainier, where over 500,000 Trainium2 chips are helping build Claude’s next-generation model,” Garman said.

Project Rainier is Amazon’s most ambitious AI server cluster, distributed across multiple data centers in the United States and built to meet Anthropic’s rapidly growing needs. It went online in October. Amazon is, of course, a major investor in Anthropic. In exchange, Anthropic has made AWS its primary model training partner. However, Anthropic’s models are now also available on Microsoft’s cloud, running on Nvidia’s chips.

OpenAI now uses AWS in addition to Microsoft’s cloud. However, the cloud giant said the OpenAI partnership could not have contributed significantly to Trainium’s revenue, because AWS runs OpenAI’s workloads on Nvidia chips and systems.

In fact, only a few U.S. companies, such as Google, Microsoft, Amazon, and Meta, have all the engineering pieces (silicon chip design expertise, homegrown high-speed interconnects, and networking technology) to even attempt to truly compete with Nvidia. (Recall that Nvidia cornered the market on one key high-performance networking technology in 2019, when CEO Jensen Huang outbid Intel and Microsoft to acquire InfiniBand hardware maker Mellanox.)

Additionally, AI models and software built to run on Nvidia’s chips also rely on Nvidia’s proprietary Compute Unified Device Architecture (CUDA) software. CUDA allows apps to use GPUs for parallel computing, among other tasks. Much like the Intel vs. SPARC chip wars of yesteryear, rewriting AI apps for non-CUDA chips is no small feat.

Still, Amazon may have a plan for that. As we previously reported, its next-generation AI chip, Trainium4, will be built to interoperate with Nvidia’s GPUs within the same system. Whether that helps take more business away from Nvidia or simply strengthens Nvidia’s dominance on AWS’s cloud remains to be seen.

It may not matter to Amazon. The company is already on track to make billions from its Trainium2 chip, and if the next generation is even better, that could very well be a win in itself.
