Artificial intelligence-generated videos purporting to show Venezuelans celebrating in the streets have gone viral on social media following the U.S. military operation in the country that led to the removal of Venezuelan leader Nicolás Maduro.

These AI clips depicting crowds celebrating have garnered millions of views on platforms like TikTok, Instagram, and X.

One of the earliest and most widely shared clips on X was posted by an account named “Wall Street Apes” with over 1 million followers on the platform.

The post shows a crowd crying and celebrating in the street, thanking the United States and President Donald Trump for removing Maduro.

The video was then flagged through X's Community Notes, a crowdsourced fact-checking feature that allows users to add context to posts they believe are misleading. “This video has been generated by AI and is currently being presented as a statement of fact intended to mislead the public,” the note said.

The clip was viewed more than 5.6 million times and reshared by at least 38,000 accounts, including that of business mogul Elon Musk, though his repost was eventually deleted.

CNBC was unable to confirm the source of the video, but fact-checkers at the BBC and AFP said the earliest known version of the clip appeared on the TikTok account @curiousmindusa, which regularly posts AI-generated content.

Even before these videos emerged, and before the Trump administration released real images of Maduro in custody, AI-generated images purporting to show him detained in the United States were already circulating.

The ousted Venezuelan leader was captured on January 3, 2026, after U.S. forces carried out airstrikes and ground raids, an operation that dominated global headlines early in the new year.

Beyond the AI-generated video, AFP’s fact-checking team identified numerous other examples of misleading content about Maduro’s ouster, including footage of a celebration in the U.S. incorrectly presented as a scene from Venezuela.

Misinformation surrounding major news events is nothing new. Similar false or misleading content circulated during the Israeli-Palestinian and Russian-Ukrainian conflicts.

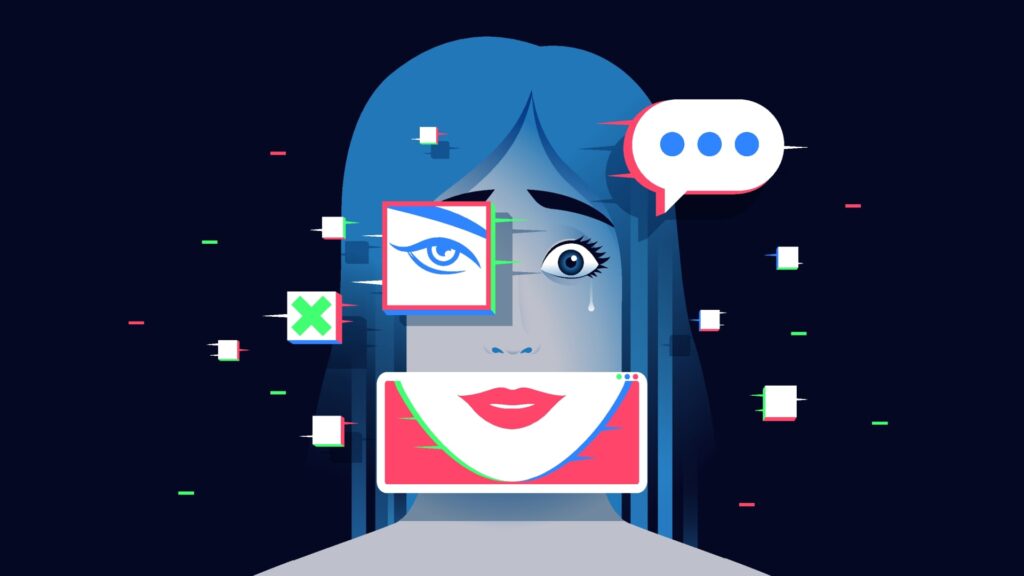

However, the widespread reach and realism of AI-generated content about recent developments in Venezuela offer a clear example of how AI is evolving as a tool for misinformation.

Platforms like Sora and Midjourney have made it easier than ever to generate hyper-realistic footage quickly and pass it off as authentic amid the chaos of breaking news events.

Such deepfakes are often aimed at amplifying a particular political narrative or causing confusion among audiences around the world.

Last year, AI-generated videos of women complaining about losing Supplemental Nutrition Assistance Program (SNAP) benefits during the government shutdown also went viral.

One such AI-generated video fooled Fox News, which presented it as authentic in an article that was later removed.

Social media companies are under increasing pressure to step up their efforts to label this type of AI-generated content.

Last year, the Indian government proposed legislation to require such labeling, and Spain approved fines of up to €35 million for unlabeled AI material.

Major platforms such as TikTok and Meta are rolling out AI detection and labeling tools, but results appear to be mixed.

CNBC identified several TikTok videos purporting to show celebrations in Venezuela that were labeled as AI-generated.

X, by contrast, relies primarily on Community Notes, and critics of the system say it is often too slow to stop falsehoods from spreading before they are labeled.

Adam Mosseri, who oversees Instagram and Threads, acknowledged the challenge in a recent post. “All major platforms will do a good job of identifying AI content, but their ability to do so will decline over time as AI gets better at mimicking reality,” he said.

“There are already a growing number of people who, like me, believe that it is more practical to fingerprint real media than fake media,” he added.

— CNBC’s Victoria Yeo contributed to this report