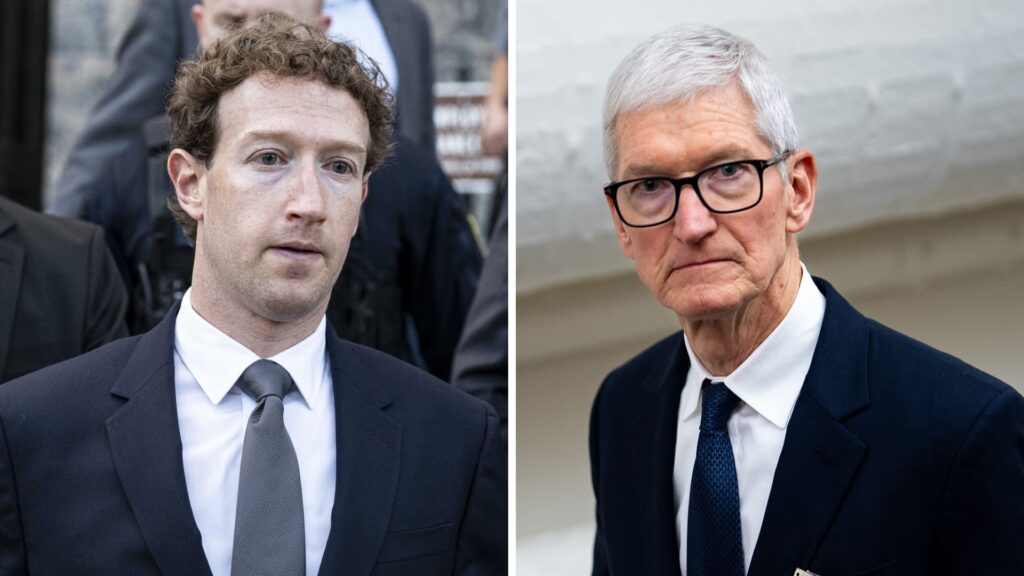

Meta CEO Mark Zuckerberg and Apple CEO Tim Cook.

John Putman | Anadolu | Al Drago | Bloomberg | Getty Images

Tech’s child safety record is under the microscope.

This week, in legal proceedings across California, New Mexico and West Virginia, Meta CEO Mark Zuckerberg and Apple CEO Tim Cook faced questions about privacy, freedom of expression and safety. These are issues that tech giants weigh with every feature release.

If both companies are found liable in their respective cases, courts could force unprecedented product changes that affect billions of people.

Zuckerberg defended his decision Wednesday in a Los Angeles courtroom when pressed by lawyers on why he allowed beauty filters on Instagram and whether the company’s efforts to grow its business outweighed concerns about young people’s mental health.

Internal messages in Meta’s New Mexico lawsuit show employees discussing the roughly 7.5 million annual child sexual abuse reports that would no longer be made after Zuckerberg’s decision, announced in 2019, to make Facebook Messenger end-to-end encrypted by default.

Those messages were revealed in newly unsealed legal filings by the state of New Mexico released this week.

“CSER (Community Standards Enforcement Report) numbers will be next year,” the employee wrote in a message dated Dec. 14, 2023, according to the filing. That same month, Meta announced in a public blog post that it would begin “rolling out default end-to-end encryption for personal messages and calls in Messenger and Facebook.”

The employee said fewer child exploitation reports were being sent, adding that it was like “putting down a big rug to cover a rock,” according to the filing.

“We care about the health of the teens and children who use our services,” Zuckerberg said Wednesday in Los Angeles when asked about his email exchange with Cook.

The state of West Virginia filed a lawsuit Thursday against the iPhone maker over its handling of child sexual abuse material (CSAM).

Opening arguments in the New Mexico lawsuit filed by Attorney General Raúl Torrez began on February 9. Zuckerberg is not scheduled to testify during the trial.

Torrez alleges that Meta failed to adequately protect apps like Instagram and Facebook from online predators and made misleading statements about the overall safety of its platforms.

“Meta knew that E2EE would make its platform less secure by interfering with the detection and reporting of child sexual exploitation and the solicitation and distribution of child exploitation images sent in encrypted messages,” the lawyers said in the filing. “Meta also recognized that safety mitigation measures were insufficient to address the risks.”

E2EE stands for End-to-End Encryption.

In response to the public filing, the social media giant said it continues to develop safety tools and features that allow it to review and address private encrypted messages reported for child safety-related issues.

Meta previously disputed the New Mexico AG’s claims, saying it was “focused on demonstrating our long-standing commitment to supporting youth.”

New Mexico court documents show several internal memos expressing major concerns about the encryption change and its impact on the company’s ability to detect and report CSAM and other harmful messages.

“Without strong mitigation measures, E2EE on Messenger means our ability to prevent harm to children is significantly reduced,” Meta Global Affairs senior staff said in a February 25, 2019 memo.

Another internal document from June 2019 said, “If security systems cannot recognize the messages themselves, they will never be able to discover all the potential harm currently being done on Messenger.”

Privacy advocates have praised encryption’s ability to effectively scramble messages so that third parties cannot overhear people’s conversations, but various law enforcement officials say encryption hinders their ability to investigate certain crimes.

After Meta completed its work encrypting Facebook Messenger, lawyers wrote in a filing, “the fears conveyed to even law enforcement agencies and their personnel have become clear.”

Mark Zuckerberg, CEO of Meta Platforms, attends a trial in Los Angeles Superior Court in a landmark test case accusing Meta and Google’s YouTube of harming the mental health of children through their addictive platforms, in Los Angeles, California, U.S., February 18, 2026.

Mona Edwards | Reuters

YouTube, owned by Alphabet, is also a defendant in the Los Angeles lawsuit, while TikTok and Snap reached settlements with the plaintiffs before the trial began in January and are no longer involved.

Apple also faces questions about encryption and privacy.

West Virginia Attorney General John “JB” McCuskey argued in a lawsuit filed Thursday that the company failed to prevent CSAM from being stored and shared on iOS devices and iCloud services.

Similar to the allegations against Meta in New Mexico, McCuskey cited Apple’s encryption practices as hindering law enforcement.

“Fundamentally, E2E encryption poses a barrier to law enforcement, including identifying and prosecuting CSAM violators and abusers,” lawyers wrote in the legal filing against Apple.

“Protecting the safety and privacy of our users, especially children, is at the heart of what we do,” Apple said in a statement.

The lawsuits against the companies and the exchange between Cook and Zuckerberg about child safety raise further questions about what obligations the companies have to users and others on their platforms.

“I thought there was an opportunity for us and Apple to do this, and I wanted to talk to Tim about it,” Zuckerberg said of his email with Cook.

As lawsuits and cases progress, more will be revealed about decisions that affect billions of people around the world.